The approach to designing a GeoEvent Service should mirror the development of a geoprocessing model in ModelBuilder in ArcGIS Pro. Essentially, you define a series of workflow operations connected by a processing flow. These operations may occur in series or in parallel, depending on how you lay out the event processing workflow. The decisions you make directly affect the number of event records you can reasonably expect to pass through a GeoEvent Service each second. To estimate that number, consider the number and complexity of the workflow operations as a whole.

Note:

Step-by-step instructions for creating a GeoEvent Service are included in the Introduction to GeoEvent Server Tutorial. You can also access the tutorial from GeoEvent Server tutorials.

Every operation included in a GeoEvent Service requires some amount of processing time to complete. A filtering operation, for instance, may take only a millisecond (or less) to compare an event record’s attribute value against a threshold and decide whether to discard the event or pass it to the next processing step. A single feature enrichment operation, by contrast, may take up to 100 milliseconds to complete. Whether your operations are trivial like the filter or highly latent like the feature enrichment, there are only 1,000 milliseconds in any given second.

Consider the following example: Suppose the total latency imposed by the filters and processors in a GeoEvent Service added up to 10 milliseconds. That service could route at most 100 event records each second (1,000 ms in each second divided by a cost of 10 ms per event record yields 100 event records per second).
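The arithmetic above can be written as a small function. This is a sketch under the same simplifying assumption the example makes: every event record passes through each operation in turn and nothing runs in parallel. The function name and the individual latency values are illustrative, not measurements from GeoEvent Server.

```python
MS_PER_SECOND = 1_000


def max_events_per_second(latencies_ms):
    """Upper bound on event records per second when every record passes
    through each operation in turn and nothing runs in parallel."""
    total_ms = sum(latencies_ms)
    if total_ms <= 0:
        raise ValueError("total latency must be positive")
    return MS_PER_SECOND / total_ms


# Filters and processors adding up to 10 ms per event record (illustrative split).
print(max_events_per_second([1, 4, 5]))  # 100.0

# A 1 ms filter followed by a 100 ms feature enrichment.
print(round(max_events_per_second([1, 100]), 1))  # 9.9
```

A single slow operation dominates the sum, which is why one feature enrichment can matter more than many cheap filters.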

In summary, the more filters and processors a GeoEvent Service uses, the more complex it becomes. Each additional operation introduces more latency, so the more complex a GeoEvent Service is, the fewer event records it can process each second. Likewise, the more GeoEvent Services you design and publish, the fewer event records GeoEvent Server as a whole can process.

Message bus in GeoEvent Server

All GeoEvent Services share a common underlying message bus. The GeoEvent Services you design, therefore, compete with one another for the opportunity to take event records from an input’s topic queue and begin running their processing workflow.

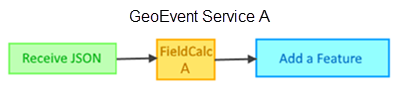

For example, consider the following simple GeoEvent Service A:

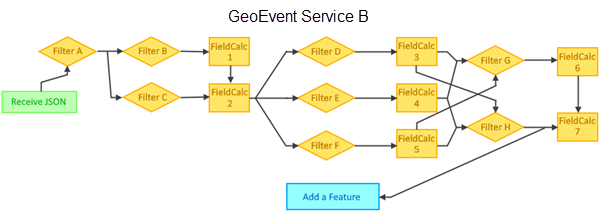

Running alone, GeoEvent Service A can process several thousand events per second on average. Now suppose you configure a second, much more complex service, GeoEvent Service B (shown below), which uses the same input and output as GeoEvent Service A.

When GeoEvent Service B is started, it is likely that the overall event record throughput will decrease from thousands of event records per second to only a few event records per second for each GeoEvent Service. Because the two GeoEvent Services share the same input and output, when the more resource-intensive GeoEvent Service is started, competition on the single message bus drives event throughput for both GeoEvent Services down.
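The effect can be illustrated with a deliberately simplified toy model. It is not how GeoEvent Server actually schedules work on its message bus; it only assumes that every running service handles every event from the shared input and that the bus behaves like one serial worker with 1,000 ms to spend each second. The per-event costs for Service A and Service B are hypothetical.

```python
MS_PER_SECOND = 1_000


def shared_bus_throughput(costs_ms):
    """Toy model: each event from the shared input costs the sum of every
    running service's per-event latency, on a single serial worker."""
    return MS_PER_SECOND / sum(costs_ms)


service_a_ms = 0.25   # hypothetical cost per event for simple Service A
service_b_ms = 200.0  # hypothetical cost per event for complex Service B

alone = shared_bus_throughput([service_a_ms])
together = shared_bus_throughput([service_a_ms, service_b_ms])

print(f"A alone: {alone:.0f} events/s")           # A alone: 4000 events/s
print(f"A and B together: {together:.1f} events/s")
```

Under these assumptions, starting Service B pulls both services down to roughly five events per second, even though Service A itself did not change.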

Streamlining or simplifying GeoEvent Services

A frequently asked question is whether it is better to model an event processing workflow as a single GeoEvent Service with many processors and filters, or to simplify the workflow by eliminating routes and forks. For example, in the complex GeoEvent Service B illustrated above, there are 12 routes an event could take before arriving at the output.

The question, then, is whether splitting this GeoEvent Service into 12 services would provide better performance. In current versions of GeoEvent Server, the answer is likely no (for the exception, see the Parallel vs. input filtering section below). For performance purposes, it typically does not matter whether an event processing workflow uses a single complex GeoEvent Service or is broken out into separate GeoEvent Services.

However, there are compelling reasons for using several simple GeoEvent Services rather than one complex service: maintainability and debugging. Complex GeoEvent Services can be overwhelming, and their logic can be hard to follow. Maintaining a GeoEvent Service becomes increasingly difficult as its processing complexity increases, especially when several people share responsibility for it. Validating and debugging a complex GeoEvent Service can also be difficult. Splitting a processing workflow into several simple GeoEvent Services makes it easier to maintain and to confirm that it produces the desired results.

Parallel vs. input filtering

When several filters are used in a GeoEvent Service to make a series of decisions, you can design a GeoEvent Service whose filtering operations appear to be performed in parallel. However, avoid designs that route event records to a set of processors or filters in parallel whenever possible.

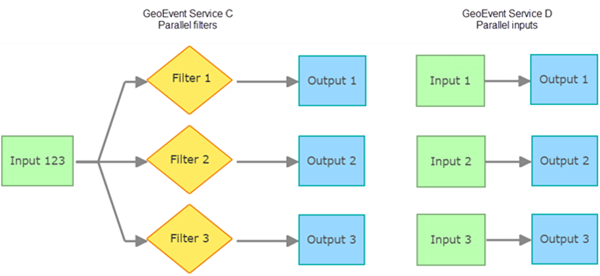

Consider the two GeoEvent Service designs illustrated below:

Suppose the inputs in the GeoEvent Services illustrated above were polling an external website to retrieve data whose records are one of three types: cars, light trucks, and commercial trucks. Each vehicle type needs to be processed differently, so in GeoEvent Service C, three filters are used to separate the vehicles into groups by type. To perform these discrete filtering operations, the GeoEvent Service must route a copy of every event record to each filter; in other words, a full set of event records is routed to each of the three filters. As a result, three times as many event records are filtered on the underlying message bus.

Each filter is configured to select some portion of the event records it receives, discard event records that do not satisfy its criteria, and route the event records of interest to an output. Each filter must handle its own copy of the data to make its decision about which event records to keep and which event records to discard.

Consider the following example: If Input 123 ingests 1,000 events per second, GeoEvent Service C has to handle 3,000 events each second. Because a single message bus is used for all inputs, outputs, and GeoEvent Services, this increased volume limits the overall number of operations GeoEvent Server can perform on inbound event data each second across all configured GeoEvent Services.
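The difference between the two designs can be counted directly. This sketch uses a hypothetical one-second batch of 1,000 vehicle records (the 600/300/100 split by type is assumed) and counts how many records are handled after ingestion in each design. In the input-filtering version, the list comprehension stands in for the external web service's own query, so those records never reach the message bus as a combined stream.

```python
from collections import Counter

VEHICLE_TYPES = ("car", "light_truck", "commercial_truck")

# Hypothetical one-second batch of 1,000 records from Input 123.
records = (
    [{"type": "car"} for _ in range(600)]
    + [{"type": "light_truck"} for _ in range(300)]
    + [{"type": "commercial_truck"} for _ in range(100)]
)


def parallel_filters(batch):
    """GeoEvent Service C: each filter receives its own full copy."""
    handled, kept = 0, Counter()
    for vehicle_type in VEHICLE_TYPES:
        for record in batch:
            handled += 1
            if record["type"] == vehicle_type:
                kept[vehicle_type] += 1
    return handled, kept


def input_filtering(batch):
    """GeoEvent Service D: each input asks the source for one type only."""
    handled, kept = 0, Counter()
    for vehicle_type in VEHICLE_TYPES:
        # Stands in for the web service answering a per-type query.
        subset = [r for r in batch if r["type"] == vehicle_type]
        handled += len(subset)
        kept[vehicle_type] += len(subset)
    return handled, kept


print("Service C handles", parallel_filters(records)[0])  # 3000
print("Service D handles", input_filtering(records)[0])   # 1000
```

Both designs keep the same records per type; only the volume moved through GeoEvent Server differs.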

GeoEvent Service D performs the same overall operation, but without any configured filters. Instead, the burden of separating the event data into groups is handled by three separate inputs, each dedicated to one type of event record. This design strategy is recommended when possible. Most web services allow data to be separated into groups using query parameters. By configuring three inputs, each with a query parameter that polls for a specific type of vehicle, you can simplify the GeoEvent Service and eliminate routes from the event processing workflow.
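As a sketch of the per-input configuration, the polling URLs for the three inputs might differ only in a query parameter. The endpoint `https://example.com/api/vehicles` and the parameter name `type` are hypothetical; a real web service documents its own URL and parameters.

```python
from urllib.parse import urlencode

# Hypothetical endpoint and parameter name; check the real service's documentation.
BASE_URL = "https://example.com/api/vehicles"
VEHICLE_TYPES = ("car", "light_truck", "commercial_truck")


def polling_urls(base_url, vehicle_types, param="type"):
    """One polling URL per input, each requesting a single vehicle type."""
    return {vt: f"{base_url}?{urlencode({param: vt})}" for vt in vehicle_types}


for vehicle_type, url in polling_urls(BASE_URL, VEHICLE_TYPES).items():
    print(f"Input for {vehicle_type}: {url}")
```

Each URL would be entered in its own input, so the source, rather than a filter, does the sorting.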

Operation latency and cost

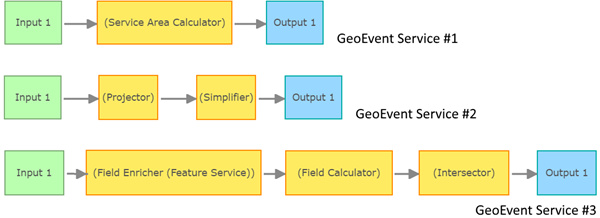

Understanding how filters and processors accomplish their configured operations is key to understanding the latency (and therefore the processing cost) associated with a GeoEvent Service. Consider the three GeoEvent Services illustrated below:

It would be easy to assume the GeoEvent Service with the most processors would have the highest operational cost. However, further examination is necessary.

GeoEvent Service #3 has the most processors: a Field Enricher, a Field Calculator, and an Intersector processor used to compute the spatial intersection between an event record’s geometry and a geofence. A closer look at these operations shows that a Field Enricher queries a feature service and then caches the feature records. The first time an event record with a particular track identifier is received, there is a relatively large cost to retrieve the related feature, but after that first call, enrichment from the feature record cache held in memory has a very small cost. The string or arithmetic operations normally performed by a Field Calculator are also generally inexpensive. Geofence geometries are likewise held in memory to make spatial operations as fast as possible. So, the three processors in GeoEvent Service #3 do not add significant latency or cost.
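The cache-then-reuse pattern described above can be sketched to show how the expensive first lookup is amortized. `CachingEnricher` and `fake_feature_service` are hypothetical names, not the Field Enricher's actual implementation, and the 100 ms query cost and 0.01 ms cache-hit cost are assumed values tallied as numbers rather than real delays.

```python
class CachingEnricher:
    """Hypothetical sketch: the first lookup per track ID pays a simulated
    feature-service query; later lookups are served from an in-memory dict."""

    def __init__(self, fetch, query_cost_ms=100.0, cache_hit_cost_ms=0.01):
        self._fetch = fetch
        self._cache = {}
        self.query_cost_ms = query_cost_ms
        self.cache_hit_cost_ms = cache_hit_cost_ms
        self.queries = 0
        self.total_cost_ms = 0.0

    def enrich(self, event):
        track_id = event["track_id"]
        if track_id not in self._cache:
            self._cache[track_id] = self._fetch(track_id)  # simulated remote query
            self.queries += 1
            self.total_cost_ms += self.query_cost_ms
        else:
            self.total_cost_ms += self.cache_hit_cost_ms
        return {**event, **self._cache[track_id]}


def fake_feature_service(track_id):
    """Stand-in for a feature service returning attributes for a track."""
    return {"owner": f"fleet-{track_id}"}


enricher = CachingEnricher(fake_feature_service)
events = [{"track_id": i % 10} for i in range(10_000)]  # 10 tracks, 1,000 events each
for event in events:
    enricher.enrich(event)

average_ms = enricher.total_cost_ms / len(events)
print(f"{enricher.queries} queries, average {average_ms:.4f} ms per event")
```

With these assumed costs, ten expensive queries spread over 10,000 events average out to about 0.11 ms per event.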

In GeoEvent Service #2, there are two types of geometry processors. The first is a Projector processor that takes an event record’s geometry and projects it to a different spatial reference and coordinate system. If the event record’s geometry is a complex polygon with many vertices, a geometric projection can be a relatively costly operation. The second processor, a Simplifier processor, performs a geometric simplification to remove and possibly reorder extraneous bends and vertices while preserving a geometry’s essential shape. Once again, for larger, more complex geometries, this can be a relatively costly operation since it cannot use cached data to perform its operation. For smaller, less complex line or polygon geometries, this GeoEvent Service might perform very well. But for larger, more complex line or polygon geometries, the latency could be much higher.
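To illustrate why simplification cost grows with vertex count, here is the Douglas-Peucker algorithm, a well-known line simplification technique. It is used only as a representative example; this does not claim it is the algorithm the Simplifier processor uses. The function counts distance computations so the work for a 100-vertex and a 1,000-vertex line can be compared. The sine-wave test lines and the 0.01 tolerance are arbitrary.

```python
import math


def perpendicular_distance(p, a, b):
    """Distance from point p to the infinite line through points a and b."""
    (x, y), (x1, y1), (x2, y2) = p, a, b
    dx, dy = x2 - x1, y2 - y1
    if dx == 0 and dy == 0:  # degenerate chord: fall back to point distance
        return math.hypot(x - x1, y - y1)
    return abs(dy * x - dx * y + x2 * y1 - y2 * x1) / math.hypot(dx, dy)


def douglas_peucker(points, tolerance):
    """Return (simplified_points, distance_checks) for a polyline."""
    if len(points) < 3:
        return list(points), 0
    first, last = points[0], points[-1]
    # Every interior vertex is measured against the first-to-last chord.
    dist, index = max(
        (perpendicular_distance(points[i], first, last), i)
        for i in range(1, len(points) - 1)
    )
    checks = len(points) - 2
    if dist <= tolerance:
        return [first, last], checks
    left, left_checks = douglas_peucker(points[: index + 1], tolerance)
    right, right_checks = douglas_peucker(points[index:], tolerance)
    # left ends with points[index] and right starts with it; drop the duplicate.
    return left[:-1] + right, checks + left_checks + right_checks


for n in (100, 1_000):
    line = [(float(i), math.sin(i / 5.0)) for i in range(n)]
    simplified, checks = douglas_peucker(line, tolerance=0.01)
    print(f"{n} vertices -> {len(simplified)} kept, {checks} distance checks")
```

Every vertex is examined at least once, and curvy geometries force repeated passes, so no cached result can shortcut the work.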

GeoEvent Service #1 is the simplest GeoEvent Service, with only one processor; however, it is potentially the costliest. The Service Area Calculator processor is not an out-of-the-box processor in GeoEvent Server; it is a sample processor you can build using the GeoEvent Server SDK. This processor uses a Network Analyst service to compute a polygon whose perimeter marks the points reachable within a given drive time from a central point. It takes considerable time (seconds, not milliseconds) for the geoprocessing service invoked by the custom processor to analyze the street network and compute the drive-time polygon. Add to this compute time the network latency of sending the request to the Network Analyst service and receiving a response. This is not the sort of operation you would want to perform if you were receiving more than a few events per minute.

To summarize, GeoEvent Service #1 appears to be a simple GeoEvent Service, but once you understand the processing being performed by that one processor, it is easy to see why it has the highest latency cost among the three examples.
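The comparison can be made concrete by ranking the three services by summed per-event latency rather than by processor count. Every latency below is an assumed, illustrative value chosen to match the qualitative descriptions above (cached enrichment is cheap, projection and simplification of complex polygons cost milliseconds, the service area call costs seconds); none are measurements.

```python
MS_PER_SECOND = 1_000

# Assumed per-event latencies in ms; not measured values.
services = {
    "GeoEvent Service #1": {"Service Area Calculator": 5_000.0},
    "GeoEvent Service #2": {"Projector": 5.0, "Simplifier": 10.0},
    "GeoEvent Service #3": {
        "Field Enricher (cached)": 0.05,
        "Field Calculator": 0.05,
        "Intersector": 0.5,
    },
}


def ceiling(operations):
    """Return (total ms per event, max events per second) for serial processing."""
    total_ms = sum(operations.values())
    return total_ms, MS_PER_SECOND / total_ms


ranked = sorted(services, key=lambda name: sum(services[name].values()), reverse=True)
for name in ranked:
    total_ms, per_second = ceiling(services[name])
    print(
        f"{name}: {len(services[name])} processor(s), {total_ms:g} ms/event, "
        f"~{per_second:.1f} events/s (~{per_second * 60:.0f}/min)"
    )
```

Under these assumptions, the one-processor service ranks first in cost and tops out at about 12 events per minute.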
